A data center’s infrastructure consists of two tiers: the exterior structure and the internal components.

The exterior infrastructure involves construction, external energy generation sources, and security. The internal infrastructure comprises computer systems, servers, cooling equipment, redundancy systems, and more.

The depth of your data center’s infrastructure depends on the extent of your operational functions. What can’t be denied is that if you don’t know your data center’s infrastructure details, then you don’t know much about your data center.

So, here is a list of the most common components of a data center infrastructure.

Data Center Facilities

A typical data center can be housed almost anywhere. However, the bigger the company that relies on the data center, the more protection is needed to preserve the data center’s continuity, security, and integrity.

Many retail-level data centers, the kind that power internet cafes, for example, are housed in ordinary commercial buildings and malls.

Most of the data center facilities owned or contracted by corporate tech and social media companies are housed in fortified buildings. And sometimes, an entire building with many floors may comprise the data center.

Some data centers are located in multi-floor bunker structures, the equivalent of an underground building. Other data center facilities are built into hollowed-out parts of a mountain.

And then, there are some data centers housed in sealed structures that are fully submerged underwater.

Why are data centers built into such places? Primarily as a security precaution. The data, proprietary information, and public and private secrets that are ferried back and forth would be at risk if data centers were built into easily accessible facilities.

Only authorized personnel have access to these facilities. Building data centers into such hard-to-reach places increases security and reduces the effectiveness of corporate espionage.

Data centers are also built into such remote and impregnable areas to protect their integrity, hardware, and software from the ravages of the elements. Most of the time, when you hear about cloud computing, it is a reference to such remote off-site facilities that can’t be easily corrupted and infiltrated.

The building that houses a data center facility is constructed of the most expensive and durable construction materials. And the same goes for data centers located in mountains, underwater, and other clandestine locations.

Strategically placing data centers in clandestine and remote areas, and building them with extra-fortified construction materials, protects them from blizzards, hurricanes, earthquakes, floods, heatwaves, hail, and other natural events that could damage or destroy the facility.

Security Measures

Most people walk by a data center and never realize it, although many more are located far away in very remote and hard-to-access facilities.

Even if you knew the location of a high-volume data center, it would be nearly impossible to get in without authorization. Most high-volume corporate data centers are protected by an unseen but significant security infrastructure. Beyond that, even if you have authorized access, the world’s leading data centers also restrict and monitor every movement and action of every person within their walls, using insider-threat tools such as InVue security products.

The security infrastructure posture for high-profile data centers may include:

- A security team.

- Video cameras.

- Security sensors.

- Audible and silent alarms.

- Other kinds of security measures.

Building-sized data centers typically have no windows, for protection purposes. However, not having physical windows can also create ventilation issues for data center equipment, which is notorious for overheating. (More on that later.)

Such facilities also feature state-of-the-art door-locking mechanisms. No one can simply walk into a high-volume data center unless authorized.

Interior Infrastructure

Data centers require an extraordinary amount of energy to function. And as data centers increase in number worldwide, the amount of energy they need for operation will also increase.

In 2014, data centers in the United States collectively used over 70 billion kilowatt-hours to power their operations. Over 6.4 million American households could have been powered with that amount of energy.

However, that record was shattered in 2017. Over 90 billion kilowatt-hours were used to power data centers across the United States during that year. And if that much power is being used in the United States alone, imagine how much electricity is being used to power data centers worldwide.
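To put these figures in perspective, the arithmetic behind the household comparison can be sketched in a few lines of Python. This is a rough illustration only: the constants are the figures quoted above, the function names are hypothetical, and applying the 2014 per-household figure to 2017 is an assumption made purely for comparison.

```python
# Figures quoted in the article for U.S. data centers.
DATA_CENTER_KWH_2014 = 70e9   # 70 billion kWh
HOUSEHOLDS_POWERED = 6.4e6    # 6.4 million households
DATA_CENTER_KWH_2017 = 90e9   # 90 billion kWh


def implied_kwh_per_household(total_kwh, households):
    """Annual energy per household implied by the article's comparison."""
    return total_kwh / households


def households_equivalent(total_kwh, kwh_per_household):
    """Number of households a given amount of energy could power."""
    return total_kwh / kwh_per_household


per_household = implied_kwh_per_household(DATA_CENTER_KWH_2014, HOUSEHOLDS_POWERED)

# Assumption: household consumption in 2017 matches the 2014 implied figure.
households_2017 = households_equivalent(DATA_CENTER_KWH_2017, per_household)
growth_percent = (DATA_CENTER_KWH_2017 / DATA_CENTER_KWH_2014 - 1) * 100

print(f"Implied use per household: {per_household:,.1f} kWh per year")
print(f"2017 equivalent: {households_2017 / 1e6:.2f} million households")
print(f"Growth from 2014 to 2017: {growth_percent:.1f}%")
```

Under these assumptions, the quoted figures imply roughly 10,900 kWh per household per year, and the 2017 total would cover a little over 8 million households.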

The ethics of using so much energy to power data centers is an argument for another day. The issue is that using so much energy creates heat, blowout, and breakdown hazards for data center equipment.

Most traditional data centers use air-cooling towers or chilling equipment to transfer heat away from overheating equipment. Air conditioning is also standard in such environments. The average temperature in a high-volume data center setting is anywhere between 68 and 72 degrees Fahrenheit. That is usually the sweet spot for keeping both equipment and staffers cool.

While some data center tech equipment is designed to operate at 115 degrees Fahrenheit, there is no guarantee that it, or other components connected to it, will not malfunction, overheat, or shut down. Additionally, human workers and maintenance staff don’t operate too well in such hot environments.
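As a minimal sketch of how these temperature figures might be used in a monitoring script, the snippet below classifies a reading against the 68 to 72 degree range and the 115 degree rating mentioned above. The function names and category labels are hypothetical, and real thresholds should come from your equipment vendor’s specifications.

```python
# Thresholds taken from the figures quoted in the article (degrees Fahrenheit).
PREFERRED_RANGE_F = (68.0, 72.0)
RATED_MAX_F = 115.0


def fahrenheit_to_celsius(temp_f):
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def classify_temperature(temp_f):
    """Label a room temperature reading relative to the article's figures."""
    low, high = PREFERRED_RANGE_F
    if temp_f < low:
        return "below preferred range"
    if temp_f <= high:
        return "preferred"
    if temp_f <= RATED_MAX_F:
        return "above preferred, within rated limit"
    return "above rated limit"


for reading in (66.0, 70.0, 95.0, 120.0):
    celsius = fahrenheit_to_celsius(reading)
    print(f"{reading:.0f} F ({celsius:.1f} C): {classify_temperature(reading)}")
```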

The point is that the interior infrastructure of a data center must be optimally designed to ventilate and shunt away heat to protect its hardware.

One standard method of doing this is the raised floor method, which is in use in almost every data center.

Raised Floors

Think of a raised floor in a data center as the inverse of a drop ceiling. A drop ceiling is a lowered false ceiling that covers a gap between it and the actual ceiling. Drop ceilings may contain wiring and pipes for easier access. They also are sometimes designed to dissuade intruders from breaking in.

A raised floor is an elevated false floor that sits a few inches to a few feet above the true floor. Rows of computer equipment, servers, racks, processing units, and wires are placed on the raised floor. Meanwhile, under the raised floor, more equipment or cooling technology is situated to optimize operations.

Think of a data center as a combustion engine inside a car. All of the components of a combustion engine are closely packed and intertwined together. And the longer a combustion engine operates, the hotter the engine becomes.

The longer that the machinery in a data center operates, the hotter it becomes. Some computer companies claim that their equipment can operate in 115-degree temperatures. Still, it may not be optimal to have dozens or hundreds of computer servers closely packed together on the same floor accumulating and increasing collective heat.

Raised floors help distribute equipment, servers, and wires so that machines are not packed closely together, collecting ever-increasing ambient heat.

Raised floors with perforated tiles can also be used to circulate cool air or other cooling system methods.

Cold or hot air can be routed under the raised floor via intakes to transfer heat and maintain preferred temperatures in each aisle.

Chiller towers can pump chilled water through thin pipes to cool the air in data center facilities.

Calibrated vector cooling strategically manipulates airflow in high-volume data center equipment while utilizing fewer fans.

The newest method of data center cooling that is becoming more widespread is liquid immersion cooling, which uses what is sometimes called supercooling liquid. This liquid is a non-flammable and non-conductive coolant into which computer systems or servers are immersed. So, instead of using air and water to cool off hot equipment, the liquid draws heat directly from the immersed components, down to the chip level.

Some studies have shown that including a raised floor in your data center infrastructure, along with AI-enabled monitoring, can decrease power costs by 40%.

So, the interior infrastructure of a data center you contract or rent should include raised flooring that can help distribute equipment and house extra equipment or cooling systems.

Servers

The heart of data center operations, and its infrastructure, is arguably the server system. Servers are computers and computer systems that facilitate and provide resources, programs, and data to other computers over vast digital network connections.

Servers accept requests from, and share resources with, other servers and computers, which are called clients or client machines.

Until recently, servers were physical machines. Now, a server can be a physical machine or an advanced software program that runs on multiple computing devices.

And there is now more than one kind of server.

A server can be a physical machine, a web server, a virtual server, or a mail server. Servers can perform a single task or multiple tasks simultaneously.

Uninterruptible Power Systems

Heatwaves, regional or localized blackouts caused by outmoded energy grids, and faulty equipment can cause costly, business-disrupting power outages.

If your data center is housed in a metropolitan city, or in a remote area where vast amounts of energy must be channeled, then an occasional power outage is a certainty, not a remote possibility.

Most high-volume and corporate data center systems, which transport the data of billion-dollar companies, incorporate uninterruptible power systems into their infrastructure.

An uninterruptible power system, or UPS, is a backup power supply system that exists to provide operational continuity insurance for a data center. A UPS is a system of backup batteries or generators that keeps power flowing to a data center, even during a blackout.

There are several kinds of UPS systems. For example, UPS battery units are designed to keep a small-scale data center running for half an hour or more. And there are full-scale data center UPS systems that can provide power for anywhere from half an hour to several hours during a blackout.

UPS systems can facilitate the even flow of power through a data center facility and even prevent power surges. And UPS systems can also act as insurance against crippling data loss in an abrupt power outage.

A viable UPS system can be the size of a laptop, a server rack cabinet, or an entire floor.

UPS systems are designed to keep a data center running long enough for diesel generators to kick in or for commercial utility power to come back on.
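A back-of-the-envelope way to reason about this is to estimate how long a battery bank can carry a given load and compare that with the time a generator needs to start. The sketch below is a simplification under stated assumptions: the function names, the 90% inverter efficiency, the safety factor, and the example numbers are all hypothetical, and real battery runtime does not scale linearly with load.

```python
def ups_runtime_minutes(battery_wh, load_w, inverter_efficiency=0.9):
    """Estimate runtime in minutes for a battery bank feeding a constant load.

    Simplified linear model: usable energy divided by load. Real batteries
    deliver less energy at high discharge rates and as they age.
    """
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    return battery_wh * inverter_efficiency / load_w * 60


def can_bridge_to_generator(runtime_min, generator_start_min, safety_factor=2.0):
    """Check that runtime covers generator start-up with a safety margin."""
    return runtime_min >= generator_start_min * safety_factor


# Hypothetical example: 5 kWh of batteries carrying a 9 kW load.
runtime = ups_runtime_minutes(battery_wh=5000, load_w=9000)
print(f"Estimated runtime: {runtime:.0f} minutes")
print("Bridges a 2-minute generator start:", can_bridge_to_generator(runtime, 2))
```

With these example numbers the estimate comes out at about half an hour, in line with the small-scale runtimes described above.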

Data Redundancy Systems

A data center’s operational functionality and data transport capabilities are worth trillions of dollars to the corporations that depend on them. The most forward-thinking and efficient data center infrastructures will include one or more data redundancy systems.

For example, a UPS system can sometimes be considered a data redundancy system that protects data during power outages. Backup UPS power batteries should always be maintained and ready to deploy to be reliable as a redundancy system.

The most common form of data redundancy in a data center infrastructure is called fault tolerance.

Fault tolerance involves continuously backing up, copying, or mirroring central systems with parallel or identical servers or circuits. If central systems fail, these backups can take over to ensure continuity of service, business, and data transport.
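The mirroring idea can be illustrated with a toy in-memory example: every write goes to all healthy replicas, and reads fall back to the next replica if the primary fails. This is only a sketch of the concept, not a production design. The `Replica` and `MirroredStore` classes are hypothetical, and the example does not handle resynchronizing a replica that comes back after a failure.

```python
class Replica:
    """A stand-in for one server holding a copy of the data."""

    def __init__(self, name):
        self.name = name
        self.healthy = True
        self.data = {}


class MirroredStore:
    """Writes to every healthy replica; reads from the first healthy one."""

    def __init__(self, replicas):
        self.replicas = list(replicas)

    def write(self, key, value):
        for replica in self.replicas:
            if replica.healthy:
                replica.data[key] = value

    def read(self, key):
        for replica in self.replicas:
            if replica.healthy and key in replica.data:
                return replica.data[key], replica.name
        raise RuntimeError("no healthy replica holds this key")


primary = Replica("primary")
backup = Replica("backup")
store = MirroredStore([primary, backup])

store.write("order-42", "shipped")
print(store.read("order-42"))   # served by the primary

primary.healthy = False          # simulate a failure of the central system
print(store.read("order-42"))   # the backup takes over
```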

Power Usage Effectiveness

While regularly keeping power usage effectiveness records is not a part of a data center’s physical infrastructure, it should be. No data center can practically function without regular internal power usage effectiveness audits.

Power usage effectiveness, also known as PUE, is an operational ratio that compares the total amount of power a data center uses with the amount of power that actually reaches its IT equipment. A PUE audit can tell you where and how your data center is efficiently using or wasting energy.

PUE is expressed as a number, and lower is better. As long as a data center’s PUE is below 1.5, it is generally considered to be using its power supply efficiently, with minimal energy and financial waste.

Any PUE ranking over 2.0 requires immediate data center energy use reviews, updates, and operation management overhauls.
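As a minimal sketch, PUE is conventionally computed as total facility energy divided by the energy consumed by IT equipment. The example below applies the 1.5 and 2.0 thresholds mentioned above. The function names, the label for the range in between, and the example meter readings are hypothetical.

```python
def power_usage_effectiveness(total_facility_kwh, it_equipment_kwh):
    """PUE = total facility energy / IT equipment energy (1.0 is the ideal)."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def classify_pue(pue):
    """Apply the article's thresholds: below 1.5 is good, over 2.0 needs action."""
    if pue < 1.5:
        return "efficient"
    if pue <= 2.0:
        return "room for improvement"
    return "needs immediate review"


# Hypothetical monthly meter readings.
monthly_total_kwh = 180_000
monthly_it_kwh = 100_000

pue = power_usage_effectiveness(monthly_total_kwh, monthly_it_kwh)
overhead_share = (monthly_total_kwh - monthly_it_kwh) / monthly_total_kwh

print(f"PUE: {pue:.2f} ({classify_pue(pue)})")
print(f"Share of energy going to cooling, power losses, etc.: {overhead_share:.0%}")
```

In this example, a PUE of 1.8 means that for every kilowatt-hour delivered to IT equipment, another 0.8 kWh goes to overhead such as cooling and power distribution.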

If you aren’t constantly aware of your data center’s PUE ranking, your energy expenses may be higher than your revenue generation. And if you don’t maintain energy efficiency with regular PUE audits, you may not have an infrastructure to maintain for long.

Who Manages the Data Center Infrastructure?

Nowadays, data centers rely on data center operations management solutions. Data center operations managers are responsible for the upkeep of the infrastructure within the data center. They perform daily tasks such as replacing devices designated as obsolete and ensuring that systems are functioning correctly. In addition to these tasks, data center operations managers often design and implement best practices for upholding standards and ensuring timely updates to infrastructure systems.

The maintenance group uses data center infrastructure management solutions to complete the core functions of its job. It may also employ a team of specialists who visit and inspect each data center regularly to ensure that systems such as HVAC are functioning well.

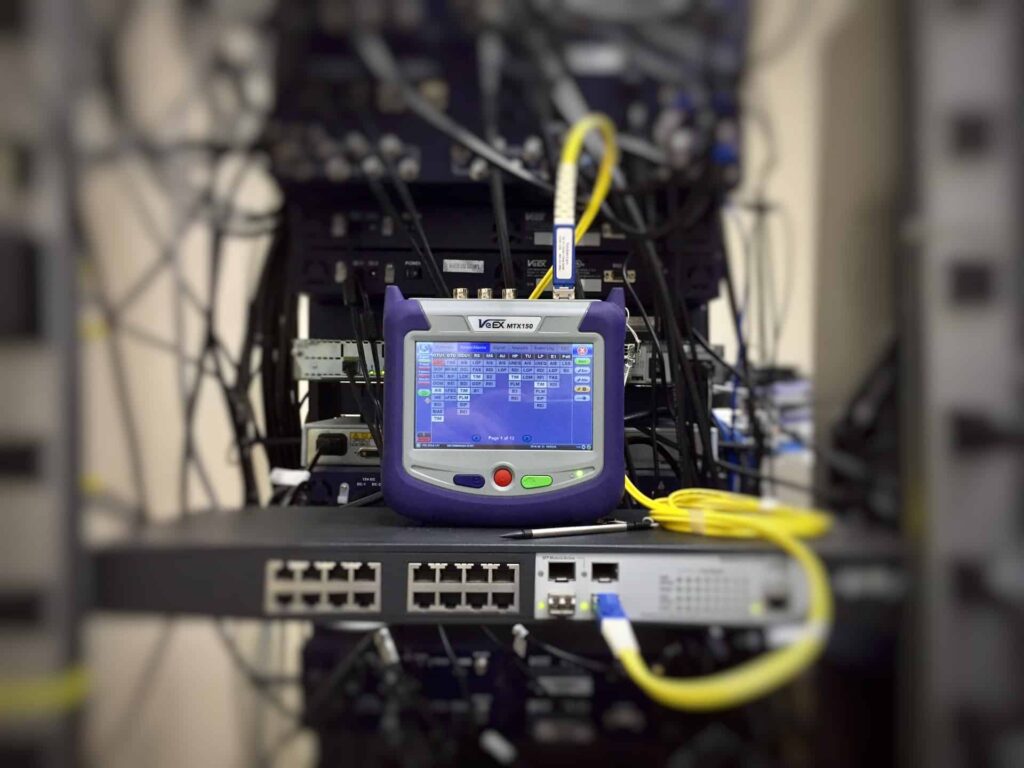

Cabling System

The integrated cabling system is one of the most essential parts of the data center. Cable management not only supports operations within the data center infrastructure, it also keeps everything interconnected and allows for solid communication. The data center cabling system is based on copper cables and optical cables. These cables and connectors are assembled into a complete wiring system and distribution unit designed for ease of installation, design, and maintenance. The key characteristics of such a wiring system include modularization, high density, and high reliability.

Cooling System

Data centers run hot, so cooling optimization is a must. Excessive heat and humidity within the space can cause significant issues, so cooling systems need to be in place within every data center as an integral part of the infrastructure. A common setup for cooling servers arranges racks into alternating hot and cold aisles, with cold air supplied to one side of each rack and hot air exhausted from the other. Keeping the hot and cold air in separate aisles prevents the two from mixing within a given room.

Storage Infrastructure

Inside data centers are the technology tools that every business needs, including the interfaces, solutions, and processes for monitoring storage infrastructure. Storage infrastructure covers the electronic equipment and software used to store and retrieve your data, applications, and files. It also covers the appliances that supervise the storage systems holding the data from your computer or company server.

This can include tape drives, hard drives, and a variety of forms of both internal and external storage, as well as backup management software for external storage.

Energy Monitoring

Traditional data centers often lack the tools needed for monitoring energy usage. Power efficiency is key, especially for companies that are looking to be more eco-friendly. Everything needs to comply with ASHRAE standards. Monitoring components need to be placed on data center power systems to collect enough data to reveal usage patterns. Maintenance teams can then come together to create effective strategies and gather the tools needed to monitor energy usage within the data center.
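One simple way to turn collected power readings into usable patterns is to compare each new reading with a rolling baseline and flag unusual spikes. The sketch below assumes readings arrive as a plain list of kilowatt values. The function name, window size, and 25% threshold are hypothetical choices, not part of any standard.

```python
from collections import deque


def flag_spikes(readings_kw, window=3, threshold=1.25):
    """Return (index, reading) pairs that exceed the rolling average of the
    previous `window` readings by more than the given threshold factor."""
    recent = deque(maxlen=window)
    spikes = []
    for index, kw in enumerate(readings_kw):
        if len(recent) == window:
            baseline = sum(recent) / window
            if kw > baseline * threshold:
                spikes.append((index, kw))
        recent.append(kw)
    return spikes


# Hypothetical readings from one power distribution unit, in kW.
readings = [100, 102, 98, 101, 140, 100]
print(flag_spikes(readings))
```

Here only the fifth reading (140 kW) is flagged, because it is well above the average of the three readings before it.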

Contact C&C For Data Center Infrastructure Services

Making sense of and incorporating infrastructure relative to your data center needs can be a daunting task.

Don’t do it alone.

If you need assistance setting up your data center infrastructure, contact C&C Technology Group today.

Last Updated on January 27, 2023 by Josh Mahan